Stop Stitching Together Market Survey Data for Salary Benchmarking

Why traditional compensation surveys force comp teams to manually patch salary data — and how decision-ready benchmarking helps teams build pay ranges faster, with less risk and rework.If you've ever opened a traditional compensation survey export and thought, "what am I actually supposed to do with this?", you're not alone.

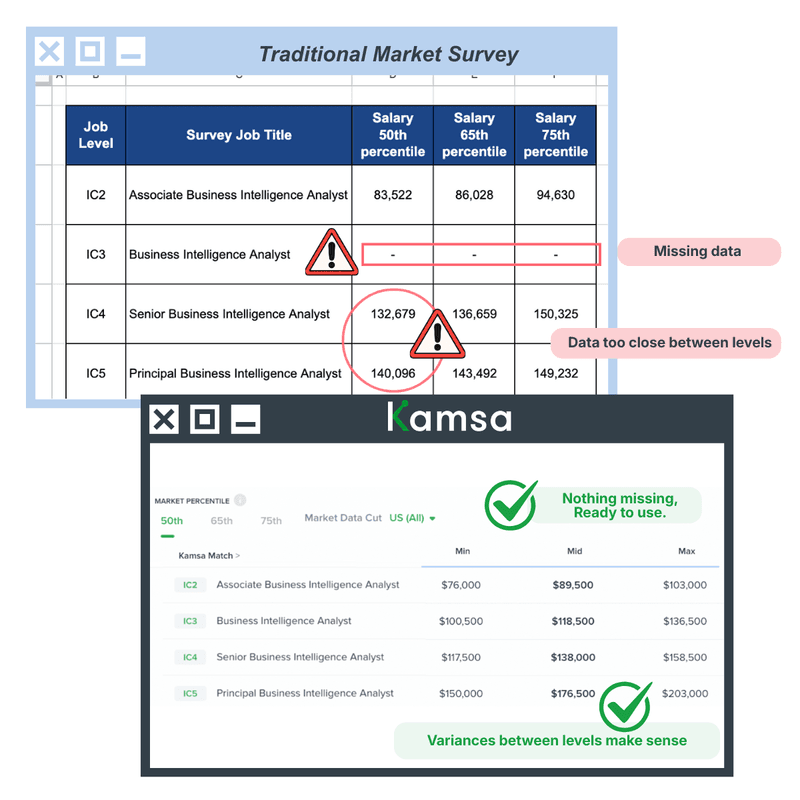

The data comes back and immediately something's off. An IC4 benchmarking lower than IC3 in the same job family. Missing levels. Midpoints so close together they're basically the same number. And meanwhile, you've got offers to price and a leader asking for a "quick" answer by end of day.

So you start patching. You smooth a midpoint here, adjust level spacing there, and add judgment calls. Eventually you end up with a pay structure that technically works, but only because you personally remember which parts were held together with duct tape. That's not a process. That's institutional knowledge waiting to walk out the door.

The core problem is that most survey data wasn't built around your job architecture, your leveling definitions, or where your company actually is in its growth stage. So turning it into something usable requires a layer of manual cleanup that nobody budgets for and nobody enjoys.

This is especially true at startups, where there usually isn't a team of comp analysts to absorb that work. You need something repeatable - data you can pull next comp review cycle without starting from scratch.

That's the problem Kamsa was built to solve. The idea is straightforward: comp data should be decision-ready when you get it, so you're building ranges instead of rebuilding the dataset, trusting the level progression instead of explaining away the gaps, and spending less time defending the numbers and more time making confident pay decisions.